Swarms of AI bots could influence people’s beliefs, threatening democracy

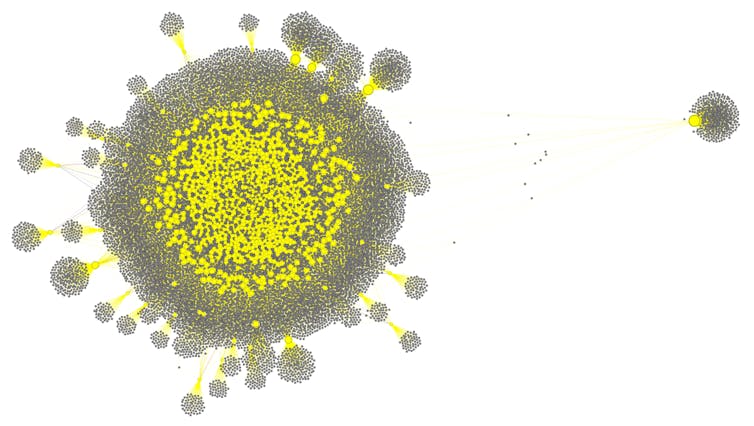

In mid-2023, around the time Elon Musk rebranded Twitter as X but before he ended free academic access to the platform’s data, my colleagues and I looked for signs of social bot accounts posting content generated by artificial intelligence. Social bots are AI programs that produce content and interact with people on social media. We uncovered a network of over a thousand bots involved in cryptocurrency scams. We dubbed it the “fox8” botnet, after one of the fake news websites it was designed to amplify.

We were able to identify these accounts because the programmers were a bit sloppy: they didn’t catch occasional posts containing self-revealing text generated by ChatGPT, such as when the AI model refused to comply with prompts that violated its terms. The most common self-revealing response was “I’m sorry, but I can’t comply with this request because it violates OpenAI’s content policy on creating harmful or inappropriate content. As an AI language model, my answers should always be respectful and appropriate for all audiences.”
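The telltale phrases above suggest a very simple screening step. The sketch below is a minimal illustration under stated assumptions, not the method we actually used: it assumes posts arrive as hypothetical `(account_id, text)` pairs, and the pattern list is an assumption built from the refusal text quoted above. As the next paragraph notes, a careful operator would filter such posts out, so this only catches sloppy ones.

```python
import re

# Hypothetical telltale patterns, derived from the refusal text quoted above.
# ['’] accepts both straight and curly apostrophes.
SELF_REVEALING_PATTERNS = [
    r"as an ai language model",
    r"violates openai['’]?s content policy",
    r"i['’]?m sorry, but i can(?:no|['’])t comply",
]
_REGEX = re.compile("|".join(SELF_REVEALING_PATTERNS), re.IGNORECASE)


def flag_accounts(posts):
    """Return the set of account IDs with at least one self-revealing post.

    `posts` is an iterable of (account_id, text) pairs (an assumed format).
    """
    flagged = set()
    for account_id, text in posts:
        if _REGEX.search(text):
            flagged.add(account_id)
    return flagged


sample = [
    ("bot_1", "I'm sorry, but I can't comply with this request because it violates OpenAI's content policy."),
    ("user_2", "Bitcoin is up today, great news for holders."),
    ("bot_3", "As an AI language model, my answers should always be respectful."),
]
print(sorted(flag_accounts(sample)))  # ['bot_1', 'bot_3']
```

A keyword filter like this has near-zero cost but also near-zero robustness: it flags only accounts whose operators failed to scrub the model’s refusals.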

We think fox8 was only the tip of the iceberg, because better programmers could filter out self-revealing posts or use open-source AI models fine-tuned to remove ethical guardrails.

The fox8 bots created fake engagement with each other and with human accounts through realistic back-and-forth discussions and retweets. In this way, they tricked X’s recommendation algorithm into amplifying exposure to their posts and amassed large numbers of followers and considerable influence.

Such a level of coordination among inauthentic online agents was unprecedented: AI models had been weaponized to create a new generation of social agents, far more sophisticated than earlier social bots. Machine learning tools for detecting social bots, like our own Botometer, were unable to distinguish between these AI agents and human accounts in the wild. Even AI models trained to detect AI-generated content failed.

Bots in the era of generative artificial intelligence

Fast forward a few years: Today, people and organizations with malicious intent have access to more powerful AI language models, including open-source ones, while social media platforms have relaxed or eliminated moderation efforts. Platforms even offer financial incentives for engaging content, regardless of whether it is real or AI-generated. This is a perfect storm for foreign and domestic influence operations targeting democratic elections. For example, a swarm of AI-controlled bots could create the false impression of widespread, bipartisan opposition to a political candidate.

The current U.S. administration has dismantled federal programs that combat such hostile campaigns and has defunded research efforts to study them. Researchers no longer have access to the platform data that would make it possible to detect and monitor these kinds of online manipulation.

I am part of an interdisciplinary team of computer science, artificial intelligence, cybersecurity, psychology, social science, journalism and policy researchers who have sounded the alarm about the threat of malicious AI swarms. We believe that current AI technology allows organizations with malicious intent to deploy large numbers of autonomous, adaptive, coordinated agents across multiple social media platforms. These agents enable influence operations that are more scalable, sophisticated and adaptive than simple scripted misinformation campaigns.

Instead of generating identical posts or obvious spam, AI agents can create varied, credible content at scale. Swarms can send people messages tailored to their individual preferences and to the context of their online conversations. Swarms can also adjust tone, style and content to respond dynamically to human interaction and to platform signals such as the number of likes or views.

Artificial consensus

In a study my colleagues and I conducted last year, we used a social media model to simulate swarms of inauthentic social media accounts using different tactics to influence a target online community. One tactic was by far the most effective: infiltration. Once an online group is infiltrated, malicious AI swarms can create the illusion of broad public agreement around the narratives they have been programmed to promote. This exploits a psychological phenomenon known as social proof: Humans are naturally inclined to believe something if they perceive that “everyone is saying it.”

Filippo Menczer and Kai-Cheng Yang, CC BY-NC-ND

Such social media astroturfing tactics have been around for years, but malicious AI swarms can create credible interactions with targeted human users at scale and get those users to follow the inauthentic accounts. For example, agents can talk about the latest game with sports fans and about current events with news junkies. They can generate language that resonates with the interests and opinions of their targets.

Even if individual claims are debunked, the persistent chorus of seemingly independent voices can make radical ideas seem mainstream and amplify negative feelings toward “others.” Manufactured artificial consensus is a very real threat to the public sphere, the mechanisms democratic societies use to form shared beliefs, make decisions and trust public discourse. If citizens cannot reliably distinguish between genuine public opinion and algorithmically generated simulations of consensus, democratic decision-making could be seriously compromised.

Risk mitigation

Unfortunately, there is no single solution. Regulation that gives researchers access to platform data would be a first step. Understanding how swarms behave collectively will be essential for anticipating risks. Detecting coordinated behavior is the main challenge. Unlike simple copy-and-paste bots, malicious swarms produce diverse outputs that resemble normal human interaction, making them much harder to detect.

In our lab, we design methods to detect patterns of coordinated behavior that deviate from normal human interaction. Even when agents look different from one another, their shared underlying goals often reveal patterns in timing, network movement and narrative trajectory that are unlikely to occur naturally.
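To make the idea of a timing pattern concrete, here is a minimal sketch of one generic coordination heuristic: compare accounts’ posting rhythms and flag pairs whose activity is nearly identical. This is an illustrative assumption, not our lab’s actual detection pipeline. The input format (`(account_id, unix_timestamp)` pairs), the 60-second window and the 0.9 similarity threshold are all hypothetical choices.

```python
import math
from collections import defaultdict
from itertools import combinations


def activity_vectors(events, window_seconds=60):
    """Map each account to a sparse vector: time-window index -> post count.

    `events` is an iterable of (account_id, unix_timestamp) pairs (assumed format).
    """
    vectors = defaultdict(lambda: defaultdict(int))
    for account, ts in events:
        vectors[account][int(ts // window_seconds)] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors stored as dicts."""
    dot = sum(count * v.get(k, 0) for k, count in u.items())
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def suspicious_pairs(events, window_seconds=60, threshold=0.9):
    """Return (a, b, similarity) for account pairs with near-identical timing."""
    vecs = activity_vectors(events, window_seconds)
    pairs = []
    for a, b in combinations(sorted(vecs), 2):
        sim = cosine(vecs[a], vecs[b])
        if sim >= threshold:
            pairs.append((a, b, round(sim, 3)))
    return pairs


events = [
    ("bot_a", 0), ("bot_a", 3600), ("bot_a", 7200),
    ("bot_b", 10), ("bot_b", 3610), ("bot_b", 7210),
    ("human", 500), ("human", 9000),
]
print(suspicious_pairs(events))  # [('bot_a', 'bot_b', 1.0)]
```

Timing alone produces false positives (for instance, people using the same scheduling tool) and misses swarms that add random jitter, which is why a practical detector would combine several behavioral traces, such as shared links, retweet targets and narratives, rather than rely on one.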

Social media platforms could use such methods. I believe that AI and social media platforms should also adopt, more aggressively, standards for watermarking AI-generated content and for recognizing and labeling such content. Finally, restricting the monetization of inauthentic engagement would reduce the financial incentives for influence operations and other malicious groups to manufacture artificial consensus.

The threat is real

While these measures might mitigate the systemic risks posed by malicious AI swarms before they become entrenched in political and social systems around the world, the current political landscape in the United States appears to be moving in the opposite direction. The Trump administration has sought to reduce regulation of AI and social media, and instead favors rapid deployment of AI models at the expense of safety.

The threat posed by malicious AI swarms is no longer theoretical: our evidence suggests that these tactics are already being deployed. I believe policymakers and technology experts must increase the costs, risks, and visibility of such manipulation.